Robo-Ray is the first Samsung humanoid robot. In this project, I was involved in the architecture design. I also implemented a robot simulator that could simulate the robot's intelligent-behavior scenarios.

Back to Portfolio

Thursday, November 19, 2009

Wednesday, November 18, 2009

Shadow 3D Project

The Shadow 3D Project developed a 3D graphics library for Digital TV. I developed its integrated development environment tools, the Scene Editor and the Widget Editor. The Scene Editor constructs a scene from various widgets and generates C++ code. The Widget Editor is a debugging tool for checking widgets developed with Shadow 3D.

Back to Portfolio


Sunday, November 15, 2009

MultiCube Project

This project was the first asynchronous Flash Translation Layer (FTL) for flash memory. It was composed of a Sector Translation Layer (STL), a Block Management Layer (BML), and a Low-Level Device driver layer (LLD). In this project, I was responsible for developing the Block Management Layer (BML). We had to devise a new algorithm and implement it.

Back to Portfolio


DSP Project

Samsung Electronics needed to reduce the effort and cost of developing software for various Digital TV models. My team decided to develop a reusable, OS-independent software platform: the Digital TV Software Platform (DSP). With this platform, we could save time and cost in producing Digital TVs. In this project, I implemented the OS Abstraction Layer (OSAL) and two applications, AnyNet and EPG.

Back to Portfolio


SHADOW Project

This project developed a 2D graphics library for Linux, Windows, and VxWorks. It was used in many Digital TV models and generated a large profit for Samsung Electronics.

Back to Portfolio


Thursday, April 30, 2009

TAME Project

The TAME (Traits, Attitudes, Moods and Emotions) Project focuses on adding complex affective state to a humanoid robot to help generate long-term human-robot attachment. TAME is a time-varying affective model that ranges over the complete affective space of an agent. As such, it is highly generative and can produce a wide range of interactive capabilities through a robotic system in support of long-term human-robot interaction. In this project, we plan to extend and develop the TAME time-varying affective control model for use in humanoid robotic systems. Specifically, we will provide a MissionLab implementation of TAME to demonstrate the ability to convey long-term interactive affective phenomena to human observers. I designed a software architecture for TAME that can operate independently and be reconfigured without recompiling. The affective component was applied to a humanoid robot, Nao.

The Configuration of the Experimental System

Nao's Emotional Gestures and Eye Colors according to Basic Emotions

Back to Portfolio

Tuesday, February 17, 2009

Biped Robot Design

I tried to make a biped robot. Before building the hardware platform, we simulated the design in ADAMS, a CAE program. We tested various behaviors and obtained torque data for each joint. We then chose the best motor for the robot and designed the robot around the selected motor. The hardware platform has not been built yet.

Thursday, February 12, 2009

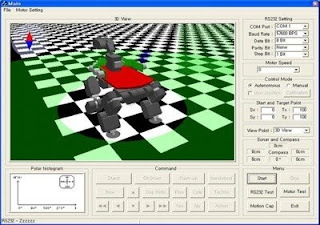

Robot Intelligence Development Environment (RIDE2)

This is RIDE2, which can simulate robots in a virtual world. I use Irrlicht, a graphics library, and Bullet, a physics engine. RIDE2 will consist of a simulator, an editor, and a behavior generator. Currently, only the editor is implemented. The other parts will be implemented later.

RIDE2 Simulator

RIDE2 Editor

Vision Recognition using Backpropagation

I tried to participate in a contest on finding the shortest path through a maze. In the contest, each entrant had to pass through five points and recognize traffic signals. To solve the problem, I proposed using back-propagation to recognize the traffic signals, and a genetic algorithm together with the A* algorithm to plan the shortest path to the goal via the five points.

Add Training Data and Test Trained BP with Sample Images

Back to Portfolio

Tuesday, February 10, 2009

Control Oil Pressure Actuators

[2001 ~ 2002] Control One and Two Axis Oil Pressure Actuators with DSP

Wednesday, January 28, 2009

Projects Done at Samsung

[2004] SHADOW Project - Graphics Library for Digital TV

[2005 ~ 2006] DSP Project - Digital TV Software Platform

[2007] MultiCube Project - Asynchronous File System for Flash Memory

[2008 ~ ] Robo-Ray Project - Software Platform for Humanoid Robots

[2009 ~ ] TAME Project - An Affective Component for Robots and CE Devices

Back to Portfolio

Tuesday, January 27, 2009

Q-Learning

I applied Q-Learning to path planning and the Tower of Hanoi. I found Q-Learning clearer than back-propagation and easier to use than other machine learning algorithms.
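As a rough illustration of Q-Learning applied to path planning, here is a minimal tabular sketch on a small grid. It is not the original program: the grid size, wall positions, reward, and the names `step`, `train`, and `greedy_path` are all assumptions made for the example. To keep the result reproducible, it replaces the usual epsilon-greedy exploration with an exhaustive sweep over every state-action pair; the update rule itself is the standard Q-Learning update.

```python
# Tabular Q-Learning on a small grid (illustrative sketch, hypothetical setup).
ROWS, COLS = 4, 4
START, GOAL = (0, 0), (3, 3)
WALLS = {(1, 1), (1, 2)}                       # hypothetical obstacles
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]   # up, down, left, right
ALPHA, GAMMA = 0.5, 0.9                        # learning rate, discount factor


def step(state, action):
    """Deterministic move; a blocked move leaves the robot where it is."""
    r, c = state[0] + action[0], state[1] + action[1]
    if not (0 <= r < ROWS and 0 <= c < COLS) or (r, c) in WALLS:
        r, c = state
    reward = 1.0 if (r, c) == GOAL else 0.0
    return (r, c), reward


def train(sweeps=300):
    """Apply the Q-Learning update to every state-action pair, repeatedly."""
    states = [(r, c) for r in range(ROWS) for c in range(COLS)
              if (r, c) not in WALLS]
    q = {(s, a): 0.0 for s in states for a in range(len(ACTIONS))}
    for _ in range(sweeps):
        for s in states:
            if s == GOAL:
                continue  # terminal state: its Q-values stay at zero
            for a, move in enumerate(ACTIONS):
                nxt, reward = step(s, move)
                best_next = max(q[(nxt, b)] for b in range(len(ACTIONS)))
                q[(s, a)] += ALPHA * (reward + GAMMA * best_next - q[(s, a)])
    return q


def greedy_path(q, max_steps=50):
    """Follow the highest-valued action from START until GOAL is reached."""
    path, state = [START], START
    while state != GOAL and len(path) <= max_steps:
        a = max(range(len(ACTIONS)), key=lambda b: q[(state, b)])
        state, _ = step(state, ACTIONS[a])
        path.append(state)
    return path
```

Calling `greedy_path(train())` returns the list of cells the learned policy visits on the way from the start to the goal. In a real robot setting, the sweep would be replaced by exploration through actual or simulated moves.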

Back to Portfolio

A* Algorithm

I made a program for testing the A* algorithm. You can build a map and test your own A* variant: for example, you can change the heuristic equation or add a smoothing algorithm.
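The idea of swapping the heuristic can be sketched as follows. This is a minimal grid-based A* written for illustration, not the code of the test program: the map format (a list of strings with `#` as an obstacle), the 4-connected unit-cost moves, and the names `a_star` and `manhattan` are assumptions.

```python
import heapq


def manhattan(a, b):
    """Default heuristic: Manhattan distance between two grid cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(grid, start, goal, heuristic=manhattan):
    """Return a shortest path as a list of (row, col) cells, or None.

    grid is a list of equal-length strings; '#' marks an obstacle.
    Pass a different heuristic function to experiment with it.
    """
    rows, cols = len(grid), len(grid[0])
    open_heap = [(heuristic(start, goal), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = [cur]
            while cur in came_from:
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if cost > best_cost[cur]:
            continue  # stale heap entry, a cheaper route was already found
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cur[0] + dr, cur[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == "#":
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cur
                priority = new_cost + heuristic(nxt, goal)
                heapq.heappush(open_heap, (priority, new_cost, nxt))
    return None
```

For example, `a_star(["....", ".##.", "...."], (0, 0), (2, 3))` plans around the two obstacle cells, and `a_star(grid, start, goal, heuristic=lambda a, b: 0)` turns the search into plain uniform-cost search for comparison.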

Back to Portfolio

Machine Learning and Algorithms

I used back-propagation to recognize objects such as spheres and cubes. First, the program was trained with the desired images. Then it read images from a camera and analyzed them using the trained weights. I also implemented a back-propagation program.

This program is a Self-Organizing Map (SOM). I made it to categorize data.

This program is a back-propagation training program. You can add layers and nodes, train on your own data, and change parameters such as steepness, momentum, and learning rate. Finally, you can save the training result to a file.

Q-Learning

I applied Q-Learning to path planning and the Tower of Hanoi. I found Q-Learning clearer than back-propagation and easier to use than other machine learning algorithms.

A* Algorithm

I made a program for testing the A* algorithm. You can build a map and test your own A* variant: for example, you can change the heuristic equation or add a smoothing algorithm.

Vector Field Histogram(VFH) Simulator

With this simulator, you can test VFH in various environments and build your own environment using a map editor. I made the simulator and tested my navigation algorithm in many cases before testing it on a real robot. OpenGL was used to construct the 3D environments.
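The core step that such a simulator exercises can be sketched roughly as below: obstacle cells around the robot are folded into a polar histogram, and a steering direction is picked from the sectors that fall under a threshold. This is a simplified illustration, not the simulator's code. The names `polar_histogram` and `pick_direction`, the constants `a` and `b`, the threshold, and the sector count are assumptions, and the histogram smoothing and valley-width handling of full VFH are left out.

```python
import math


def polar_histogram(cells, robot, sectors=36, a=10.0, b=1.0):
    """Sum obstacle magnitudes per angular sector around the robot.

    cells is an iterable of (x, y, certainty). Each cell contributes
    certainty^2 * (a - b * distance), so nearer obstacles weigh more.
    """
    hist = [0.0] * sectors
    width = 2 * math.pi / sectors
    for x, y, certainty in cells:
        dx, dy = x - robot[0], y - robot[1]
        dist = math.hypot(dx, dy)
        magnitude = certainty ** 2 * max(a - b * dist, 0.0)
        angle = math.atan2(dy, dx) % (2 * math.pi)
        hist[int(angle / width) % sectors] += magnitude
    return hist


def pick_direction(hist, target_angle, threshold=1.0):
    """Return the center angle of the free sector nearest the target.

    Returns None when every sector is at or above the threshold.
    """
    sectors = len(hist)
    width = 2 * math.pi / sectors
    target = int((target_angle % (2 * math.pi)) / width) % sectors
    free = [k for k, density in enumerate(hist) if density < threshold]
    if not free:
        return None

    def circular_distance(k):
        d = abs(k - target)
        return min(d, sectors - d)

    best = min(free, key=circular_distance)
    return (best + 0.5) * width
```

With a single obstacle straight ahead, for instance `polar_histogram([(2.0, 0.0, 3)], (0.0, 0.0))`, the sector facing the obstacle is blocked and `pick_direction` steers into the neighboring free sector.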

VFH Simulator Architecture


VFH Simulator Map Editor

Friday, January 23, 2009

Samsung Software Membership Projects (SSM)

Home Networking


This project monitored a house over the Internet. A user could access the camera and get various information about the house from a homepage that we built. This was my first Software Membership project.

X-Men Project

In this project, my part was to implement a navigation program. I applied HIMM and VFH to an omni-wheel robot. The robot could send camera images to a user's PDA, and the user could control the robot with the PDA.


Goliath Project

My team designed this robot's appearance and built it. My part was a navigation program and a behavior generation program. I used the A* algorithm and Histogramic In-Motion Mapping (HIMM) for navigation. We could generate a variety of behaviors with the behavior generation program.
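The HIMM map update can be sketched roughly as follows. For each range reading, only the cells along the sensor axis are touched: the cell at the measured range is incremented and the cells between the robot and that range are decremented. This is an illustrative sketch, not the robot's code. The function name `himm_update`, the grid layout, and the cell-stepping along the beam are assumptions, and the increment of 3, decrement of 1, and certainty range of 0 to 15 are assumed values taken from common descriptions of HIMM.

```python
import math

CV_MIN, CV_MAX = 0, 15   # assumed certainty value range
INC, DEC = 3, 1          # assumed increment for a hit, decrement for free cells


def himm_update(grid, robot_cell, angle, range_cells):
    """Update a certainty grid in place for one range reading.

    grid is a list of lists of ints, robot_cell is (row, col), angle is the
    sensor axis direction in radians, range_cells is the range in cells.
    """
    rows, cols = len(grid), len(grid[0])
    r0, c0 = robot_cell
    for k in range(1, range_cells + 1):
        r = r0 + round(k * math.sin(angle))
        c = c0 + round(k * math.cos(angle))
        if not (0 <= r < rows and 0 <= c < cols):
            break  # the beam left the map
        if k == range_cells:
            grid[r][c] = min(grid[r][c] + INC, CV_MAX)   # obstacle evidence
        else:
            grid[r][c] = max(grid[r][c] - DEC, CV_MIN)   # free-space evidence
```

Repeated readings of the same obstacle drive its cell toward the maximum certainty, while a later reading that passes through the cell lowers it again, which is what lets the map follow a changing environment.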


Mobile Robot

I implemented a program that could build a map and avoid obstacles by gathering distance data from a robot. I also built the mobile robot and used a PID algorithm to control the DC motor of an RC car.

Spec : 8 Ultrasonic Sensors, 1 Digital Compass, 1 DC Motor, PIC MCU
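A PID speed loop of the kind mentioned above can be sketched as follows. This is a generic illustration in Python rather than the firmware that ran on the PIC MCU. The `PID` class, the gains, and the first-order motor model in `simulate` (including the time constant `tau`) are assumptions chosen only to make the example runnable.

```python
class PID:
    """Textbook PID controller with a fixed time step passed on each update."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        """Return the control output for one sample."""
        error = setpoint - measured
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no previous sample on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(setpoint=100.0, steps=2000, dt=0.01, tau=0.5):
    """Drive a hypothetical first-order motor model toward the setpoint."""
    pid = PID(kp=2.0, ki=5.0, kd=0.0)
    speed = 0.0
    for _ in range(steps):
        drive = pid.update(setpoint, speed, dt)
        speed += (drive - speed) * dt / tau  # simple Euler step of the model
    return speed
```

In this model the integral term is what removes the steady-state error: with a proportional gain alone, the simulated speed would settle below the setpoint.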

Back to Portfolio


Robot Intelligence Development Environment(RIDE)

I developed a robot simulator, RIDE. RIDE presents a simple and efficient method for modeling a robot cognition system using synthetic vision in virtual environments. Virtual robots perceive external stimuli through a synthetic vision system and use the perceived data for navigation and action. I made my own graphics engine with OpenGL and used the Open Dynamics Engine (ODE) for physics. RIDE consists of two parts: the RIDE Editor and the RIDE Simulator.

RIDE Architecture

Robots and Environments

Face Expression

Goal Driven Agent Test

Back to Portfolio

Eyebot

EyeBot was developed at the University of Western Australia. The robot has differential steering, three infrared position sensitive devices (PSDs), a color CMOS camera, a digital compass, and a wireless communication module. The three distance values from the PSDs and the robot's current location (delivered by shaft encoders) are sent to the host computer, which updates the HIMM map in real time. A new path is generated using the A* algorithm and transmitted to the robot. A 3D map is also built from the distance data and the current robot position.

Architecture

Robot Control Program

Go to a destination in an unknown environment

Haptic Device

This was a haptic device that detected the state of your hand. I used a neural network algorithm to recognize the hand's state. If you make a gesture with this glove, the control program can recognize it, but the desired gestures must be trained before the device is used.

Spec : 5 Flex Sensors, PIC MCU, 1 Bluetooth


Architecture

Four Legged Robot

I made a four-legged robot. It could walk, build a map, and avoid obstacles. Histogramic In-Motion Mapping (HIMM) and Vector Field Histogram (VFH) were used for navigation. I implemented the control program, which could build a 3D map of the environment, generate the robot's behaviors, and test the map-building and obstacle-avoidance algorithms.

Spec : 14DOF, AI Motor, 1 LCD, PIC MCU, 5 Ultrasonic Sensors, 1 Bluetooth, 1 Touch Sensor

I won several contests in Korea with this robot.

Architecture of Four Legged Robot
